Migrating Pega PRPC applications to containers and Kubernetes

This article is a guide to what you should consider, and how you should plan, when migrating or deploying your Pega application to a container platform (Kubernetes or another container orchestration platform).

This article assumes that you are at one of the following stages in your Pega application journey:

* You already operate your Pega application in a cloud environment and now want to containerize it and run it on a container platform.

* You have already decided, after detailed analysis and groundwork, to move your existing Pega application (currently running on on-premises bare-metal servers) to a container environment.

* You are starting a new Pega project or application and want to run it in containers from day one as part of your Pega cloud journey.

This article covers strategies, considerations, and planning for containerizing the application and app server components of a Pega platform. It does not cover the following:

* Benefits of moving to containers.

* Strategy for migrating the database to the cloud.

* Strategy for choosing a cloud platform (private or public cloud, vendor, platform, etc.).

* Why, and whether, to run Pega on bare-metal cloud compute instances rather than on a container platform.

First, make sure your decision to containerize an existing monolithic Pega application and run it on a container platform or Kubernetes is a sound one. Don’t do it just because you can or want to; migrate existing Pega applications into containers with a long-term vision in mind. Moving to containers and Kubernetes just for the sake of it can introduce much more scope and many more technical challenges, depending on the applications and the teams running them.

Migration Phases

Every migration is different, but most follow the same high-level process. Below are typical phases to consider on your journey to containerizing your Pega application.

Platform Options

When you are thinking of deploying your Pega application on a container platform, choosing a Kubernetes-based platform is a wise choice, because Pega supports Kubernetes (k8s), the industry-wide standard for container orchestration.

You can run Pega applications on the following supported Kubernetes services:

Infrastructure-as-a-Service:

- AWS Elastic Kubernetes Service (EKS)

- Microsoft Azure Kubernetes Service (AKS)

- Google Kubernetes Engine (GKE)

Platform-as-a-Service:

- VMware Tanzu (Enterprise PKS)

- Red Hat OpenShift

Which Kubernetes-based container platform you choose depends on several factors:

- Availability of internal Kubernetes expertise: You may not have the internal resources to ensure that Kubernetes installation, troubleshooting, deployment management, upgrades, monitoring, and ongoing operations won’t cause significant business disruption and cost increases, especially the need for more skilled personnel.

If that’s the case, consider a managed Kubernetes service (AWS EKS, GCP GKE, Azure AKS). This is particularly helpful if you are struggling to keep up with the community’s rate of change and with best practices for running Kubernetes in production.

- Security: If security considerations rule out the public cloud, an on-premises container platform such as OpenShift or PKS is the option. If you have the expertise and capacity to manage a Kubernetes cluster yourself, installing Kubernetes on your own servers or VMs is another option.

- Your current cloud vendor: If you are already on a specific public cloud platform for reasons such as cost, usage, features, or network, choosing that vendor’s Kubernetes service makes sense.

- Taking advantage of both worlds: If you have expertise and comfort with OpenShift but still want the benefits of the public cloud, you can install an OpenShift Kubernetes cluster on AWS EC2 or on any other cloud vendor’s compute engine.

- DB migration strategy: Your database migration strategy is another factor in choosing which Kubernetes-based container platform best suits your Pega application. If you don’t want to move your database to the cloud, consider an on-premises container platform such as OpenShift or PKS.

Once you have determined the use case and your team’s capabilities, stick with a stable open-source release, no matter which cloud you choose for production-grade containerized workloads. Best of all, go with the industry standard for container orchestration: Kubernetes.

Use Docker, Kubernetes, and Helm to manage Pega Infinity™ according to modern cloud architecture principles, with automated deployment, scaling, and management of containerized applications. Customize the deployment with Elasticsearch, Cassandra/DSS, Traefik, or an EFK (Elasticsearch-Fluentd-Kibana) stack.

Associated Considerations, Tasks, and Activities

When you plan to host your Pega application in containers on a container platform, you need to consider many associated activities. These span different layers of the deployment and different stages of the project lifecycle.

Below are some of the typical activities to consider in your planning. The list is not exhaustive.

Reference Deployment Architecture (Baseline)

When we talk about deployment architecture for a Pega application on a container platform, we should consider two architectures, one for each of two layers:

1. How to deploy the container platform itself.

2. How to deploy the Pega platform on top of the container platform.

Deployment architecture for the container platform:

The deployment architecture for the container platform generally depends on the following factors:

- Which container platform you choose (OpenShift, PKS, EKS, GKE, etc.)

- Which underlying cloud hosting you choose (private or public; bare metal, AWS, GCP, Azure)

- Application performance requirements (NFRs)

- HA and fault-tolerance requirements

- Usage pattern (dev, QA, prod environments)

Every vendor and platform provider has its own recommended deployment architecture; refer to the respective documentation for details.

For Pega PRPC, the following Kubernetes environments are supported:

- Kubernetes

- OpenShift

- Amazon EKS

- Google Kubernetes Engine (GKE)

- VMware Enterprise Pivotal Container Service (PKS)

- Azure Kubernetes Service (AKS)

For more details about choosing a Kubernetes-based Pega deployment, see the following two links.

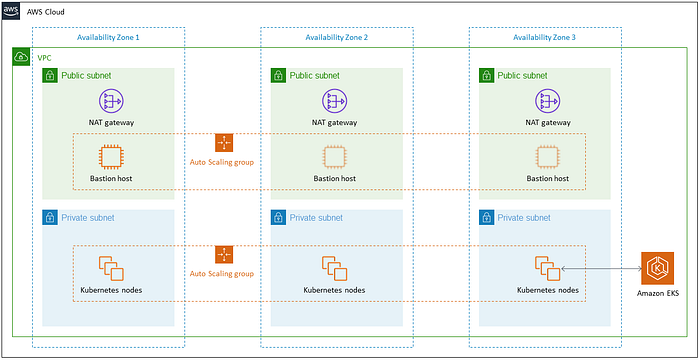

Below are some sample reference deployment architectures. The first is OpenShift in an on-premises private cloud, the second is OpenShift running on AWS EC2, and the third is AWS EKS.

Reference 1: OpenShift Container platform

OpenShift Container Platform is a platform for developing and running containerized applications. It is designed to allow applications and the data centers that support them to expand from just a few machines and applications to thousands of machines that serve millions of clients.

Because it is built on Kubernetes and implemented with open Red Hat technologies, OpenShift lets you extend your containerized applications beyond a single cloud to on-premises and multi-cloud environments.

For more details, see:

https://docs.openshift.com/container-platform/3.4/architecture/index.html

Reference 2: OpenShift container platform on AWS

You can build an OpenShift Container Platform environment in the AWS Cloud to get the benefits of both worlds.

For details on instance sizing and setup, refer to the document below.

Reference 3: AWS EKS

Amazon EKS is a fully managed service that makes it easy to deploy, manage, and scale containerized applications using Kubernetes on AWS. Amazon EKS runs the Kubernetes control plane for you across multiple AWS availability zones to eliminate a single point of failure. Amazon EKS is certified Kubernetes conformant so you can use existing tooling and plugins from partners and the Kubernetes community. Visit aws.amazon.com/eks to learn more.

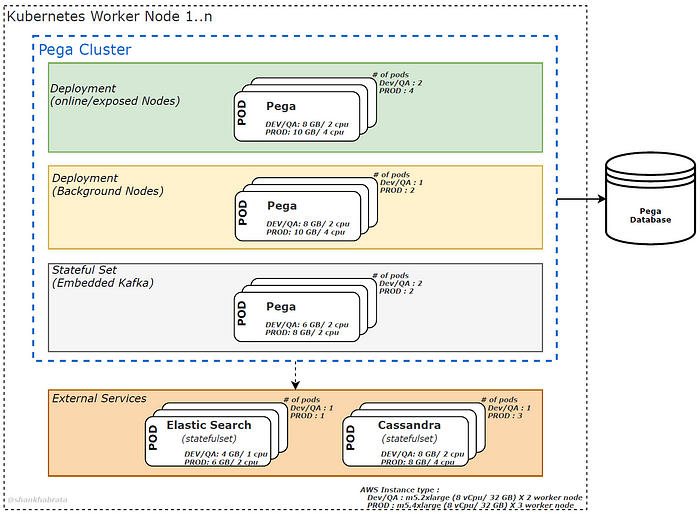

Deployment architecture for Pega platform:

When you migrate your Pega application to containers on a container platform, planning and sizing the deployment architecture is critical to the application’s success.

Work with Pegasystems or your internal Pega architect on hardware sizing based on your application’s non-functional requirements (NFRs). Then size the pods and worker nodes of the container platform that will host your Pega application.

Don’t try to map pod sizing on the container platform directly to instance sizing from an on-premises Pega installation, or from a cloud installation on bare-metal compute resources.
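Once per-pod resource requests are known, a rough worker-node count can be derived. Divide each node’s allocatable capacity by the pod’s requests, and let the tighter of CPU and memory decide. The Python sketch below does only that arithmetic. The node and pod figures in it are hypothetical placeholders, not Pega sizing guidance. Real numbers should come from your sizing exercise and performance tests.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_millicores: int
    memory_mib: int


def pods_per_node(node_allocatable: Resources, pod_request: Resources) -> int:
    """Pods of this size that fit on one node; the scarcer resource (CPU or memory) wins."""
    by_cpu = node_allocatable.cpu_millicores // pod_request.cpu_millicores
    by_mem = node_allocatable.memory_mib // pod_request.memory_mib
    return min(by_cpu, by_mem)


def nodes_needed(pod_count: int, node_allocatable: Resources, pod_request: Resources) -> int:
    """Worker nodes required to schedule pod_count identical pods."""
    per_node = pods_per_node(node_allocatable, pod_request)
    if per_node == 0:
        raise ValueError("pod request exceeds the node's allocatable capacity")
    return math.ceil(pod_count / per_node)


if __name__ == "__main__":
    # Hypothetical figures: a node with 7.5 vCPU / 28 GiB allocatable after system
    # reservations, and a web-tier pod requesting 2 vCPU / 8 GiB.
    node = Resources(cpu_millicores=7500, memory_mib=28 * 1024)
    web_pod = Resources(cpu_millicores=2000, memory_mib=8 * 1024)
    print(pods_per_node(node, web_pod))    # 3
    print(nodes_needed(7, node, web_pod))  # 3
```

Add-on services, backing services, and headroom for rolling updates need their own capacity on top of this figure.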

Below is a typical reference deployment architecture and sizing diagram for dev, QA, and prod environments hosting a Pega application. Fine-tune it to your hardware sizing so that it supports your application’s NFRs.

Note:

- In production, it is best practice to assign the web tier, batch/background tier, stream tier, and external service tier to separate, dedicated worker nodes. Use nodeName, nodeSelector, or affinity rules for this, and keep all tiers in the same namespace.

- In production, you can create a background tier for BIX processing with one pod that has the same specification as a background/batch pod.

- In production, create a dedicated tier for real-time data grid processing with one pod that has the same specification as a background/batch pod.

- Pods and resources for add-on services (Elasticsearch, fluentd-elasticsearch, Kibana, ingress load balancer) are not shown; plan and size them appropriately.

- Pods and resources for backing services such as the Search and Reporting Service (SRS), which you can configure for one or more Pega deployments, are not shown.

- Storage (file/blob/SSD) needs separate planning and sizing, which the diagram does not show.
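The tier-to-node placement described in the first note can be expressed with a `nodeSelector` in each tier’s Deployment. The Python sketch below builds minimal Deployment manifests for three tiers that share one namespace. It writes them out as a Kubernetes `List` in JSON, which `kubectl apply -f` accepts. Several names are hypothetical assumptions: the `pega-tier` node label, the namespace, the image reference, and the replica counts. A real Pega tier also needs environment configuration, resources, and probes, such as those the Helm charts generate.

```python
import json


def tier_deployment(tier: str, namespace: str, image: str, replicas: int) -> dict:
    """Minimal Deployment for one Pega tier, pinned to nodes labelled for that tier."""
    labels = {"app": "pega", "tier": tier}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"pega-{tier}", "namespace": namespace, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Schedule only onto worker nodes carrying the (hypothetical) pega-tier label.
                    "nodeSelector": {"pega-tier": tier},
                    "containers": [{"name": "pega", "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    namespace = "pega-prod"                          # hypothetical; all tiers share it
    image = "registry.example.com/pega/pega:8.8.0"   # hypothetical private-registry image
    tiers = {"web": 3, "batch": 2, "stream": 2}      # hypothetical replica counts
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [tier_deployment(t, namespace, image, n) for t, n in tiers.items()],
    }
    print(json.dumps(manifest, indent=2))
```

The worker nodes must carry the matching label, for example `kubectl label nodes <node-name> pega-tier=web`. Otherwise the pods stay Pending.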

For more details, see the following links.

Best Practices

Application-level best practices:

1. Don’t do it just because you can

Make sure your decision to containerize an existing monolithic Pega application and run it on a container platform or Kubernetes is a sound one. Don’t do it just because you can; migrate existing Pega applications into containers with a long-term vision in mind. Moving to containers and Kubernetes just for the sake of it can introduce much more scope and many more technical challenges, depending on the applications and the teams running them.

2. Seriously consider refactoring your application

If you’re going to migrate a monolith to containers and run it with Kubernetes, do your due diligence on refactoring the application to maximize the benefits of such a move. Containers can be used for ‘lifting and shifting’ existing applications, but in order to enjoy their benefits, you should consider refactoring them.

Don’t think of it as moving to containers and Kubernetes; think of it as decomposing the application.

Run the Pega Cloud Readiness Tool to assess the refactoring needs of your Pega application.

https://community.pega.com/marketplace/components/pega-cloud-readiness-tool

3. Make your Pega application cloud native

Consider whether to start with an older application and refactor it, or to create a new application to get the most from the cloud and containers. Your newer applications may be easier to maintain and update than some of your older ones.

Assess your current Pega application against the Twelve-Factor App methodology and refactor it so that it becomes truly cloud native. Otherwise, you may get your Pega application into containers without gaining the real benefits of container and cloud features.
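One twelve-factor principle that matters immediately in containers is keeping configuration in the environment rather than in the image. The same image can then move unchanged from dev to QA to prod. The Python sketch below illustrates only that principle: read settings from environment variables and fail fast at start-up when one is missing. The variable names are illustrative assumptions. Check your Pega image’s documentation for the names it actually reads.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Tuple

# Illustrative variable names (assumed, not taken from Pega documentation).
REQUIRED: Tuple[str, ...] = ("JDBC_URL", "DB_USERNAME", "DB_PASSWORD")


@dataclass(frozen=True)
class DbConfig:
    jdbc_url: str
    username: str
    password: str


def load_db_config(env: Mapping[str, str] = os.environ) -> DbConfig:
    """Build config from the environment; refuse to start if anything is missing."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("missing required environment variables: " + ", ".join(missing))
    return DbConfig(env["JDBC_URL"], env["DB_USERNAME"], env["DB_PASSWORD"])


if __name__ == "__main__":
    # In Kubernetes these values would typically be injected from a ConfigMap/Secret.
    cfg = load_db_config()
    print("connecting to", cfg.jdbc_url)
```

Failing fast turns a misconfigured pod into an immediate, visible crash instead of a half-started instance.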

4. Plan for observability, telemetry, and monitoring from the start

Make sure troubleshooting data is available even if the container itself isn’t. When you develop applications for containers and Kubernetes, plan and set up observability, telemetry, and monitoring from the start.

If it wasn’t originally designed for containers, you’ll need to think about how you will troubleshoot issues when they arise. Don’t figure this out after you’ve containerized the application.

Many applications not designed for containers use local logs to store troubleshooting and other information. If the application goes down, operations folks will often log into the machine and look through the logs. In the container world, if the container is down there’s nothing to log into.
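The usual remedy is to write logs to standard output as structured records. A collector such as Fluentd in an EFK stack can then ship them off the node before the container disappears. Pega’s own engine logging is configured in Pega itself, not in Python. This Python sketch only illustrates the principle: one JSON object per line on stdout, with no local log files.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line that log shippers can parse."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "ts": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "msg": record.getMessage(),
        })


def configure_stdout_logging(level: int = logging.INFO) -> logging.Logger:
    """Send all logging to stdout (never to a file inside the container)."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    root = logging.getLogger()
    root.handlers[:] = [handler]  # replace any existing file handlers
    root.setLevel(level)
    return root


if __name__ == "__main__":
    configure_stdout_logging()
    logging.getLogger("migration.demo").info("container started")
```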

5. Create a migration framework

The more structure you can create for this process, the better. You should have a plan and strategy in place to iteratively modularize, containerize, and run at scale. If needed, running a pilot first is a wise step.

6. Don’t blindly trust and reuse Pega images

Don’t make the mistake of placing blind faith in Pega container images, especially from a security perspective. If required, build your own image on top of the Pega-provided image, keeping your compliance, security, and logging requirements in mind.

At the same time, keep your container image as light as possible: include only what you absolutely need.

Plan to host those images in your private image repository, with proper version control and tagging.
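A simple guard for the tagging rule is to reject any image reference that does not come from the private registry. The guard also rejects references that rely on the mutable `latest` tag, whether set explicitly or by omission. This Python sketch is a hypothetical check, with `registry.example.com` standing in for your registry. A reference pinned by `@sha256:` digest counts as immutable.

```python
from __future__ import annotations


def check_image_ref(image: str, private_registry: str) -> list[str]:
    """Return a list of policy problems for one image reference (empty means OK)."""
    problems: list[str] = []
    if not image.startswith(private_registry.rstrip("/") + "/"):
        problems.append(f"{image}: not pulled from {private_registry}")
    if "@sha256:" in image:
        return problems  # pinned by digest: immutable
    # Look for the tag only in the last path segment, so a registry port ("host:5000/...")
    # is not mistaken for a tag.
    last_segment = image.rsplit("/", 1)[-1]
    if ":" not in last_segment:
        problems.append(f"{image}: no tag (defaults to 'latest')")
    elif last_segment.rsplit(":", 1)[1] == "latest":
        problems.append(f"{image}: uses mutable 'latest' tag")
    return problems


if __name__ == "__main__":
    for ref in ("registry.example.com/pega/pega:8.8.0", "pegasystems/pega"):
        print(ref, check_image_ref(ref, "registry.example.com"))
```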

7. CI/CD and automation are critical for success and sustainability

Automation is a critical characteristic of container orchestration; it should be a critical characteristic of virtually all aspects of building an application to be run in containers on Kubernetes. Otherwise, the operational burden can be overwhelming.

A well-conceived CI/CD pipeline is necessary for deploying, running, and maintaining a Pega application on a container platform.
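A pipeline stage can enforce some of the platform practices listed below before anything reaches the cluster. The Python sketch below is one such hypothetical gate. It assumes the rendered Deployment manifests are available as JSON files. It fails the build when a container lacks resource requests/limits or a liveness/readiness probe.

```python
from __future__ import annotations

import json
import sys
from typing import Any

REQUIRED_PROBES = ("livenessProbe", "readinessProbe")


def lint_deployment(manifest: dict[str, Any]) -> list[str]:
    """Return human-readable policy violations for one Deployment manifest."""
    errors: list[str] = []
    name = manifest.get("metadata", {}).get("name", "<unnamed>")
    containers = manifest.get("spec", {}).get("template", {}).get("spec", {}).get("containers", [])
    if not containers:
        errors.append(f"{name}: no containers defined")
    for container in containers:
        cname = container.get("name", "<unnamed>")
        resources = container.get("resources", {})
        for section in ("requests", "limits"):
            if not resources.get(section):
                errors.append(f"{name}/{cname}: missing resources.{section}")
        for probe in REQUIRED_PROBES:
            if probe not in container:
                errors.append(f"{name}/{cname}: missing {probe}")
    return errors


def main(paths: list[str]) -> int:
    """Lint each JSON manifest file; a non-zero return fails the pipeline stage."""
    failures: list[str] = []
    for path in paths:
        with open(path) as fh:
            failures.extend(lint_deployment(json.load(fh)))
    for failure in failures:
        print(failure, file=sys.stderr)
    return 1 if failures else 0


if __name__ == "__main__":
    sys.exit(main(sys.argv[1:]))
```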

Platform-level best practices:

- Use liveness and readiness checks.

- Do proper hardware and pod sizing based on application NFRs.

- Fine-tune compute sizing and optimize it through performance testing.

- Perform security and vulnerability checks.

- Work out the enterprise integrations: single sign-on (SSO), authentication and authorization, monitoring, security, ACL, governance, risk and compliance, and more.

- Don’t ignore your database migration and its associated strategies.
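Liveness and readiness checks are declared per container as HTTP probes. The Python sketch below builds both probe definitions as Kubernetes container fields. Several values in it are assumptions to verify against your Pega version and chart, not confirmed settings. The ping path below is the one the pega-helm-charts have used. The port (8080) and all timings are placeholders. The long initial delay reflects that a Pega node can take minutes to start. Readiness only removes the pod from load balancing, so it reacts faster than liveness, which restarts the container.

```python
import json

# Assumed health endpoint and port; confirm both for your Pega version and image.
PING_PATH = "/prweb/PRRestService/monitor/pingService/ping"
DEFAULT_PORT = 8080


def http_probe(path: str, port: int, initial_delay: int, period: int,
               failure_threshold: int, timeout: int = 20) -> dict:
    """One Kubernetes httpGet probe definition."""
    return {
        "httpGet": {"path": path, "port": port, "scheme": "HTTP"},
        "initialDelaySeconds": initial_delay,
        "periodSeconds": period,
        "timeoutSeconds": timeout,
        "failureThreshold": failure_threshold,
    }


def pega_probes(port: int = DEFAULT_PORT) -> dict:
    return {
        # Readiness: stop routing traffic to the pod after 3 consecutive failures.
        "readinessProbe": http_probe(PING_PATH, port, initial_delay=300, period=30, failure_threshold=3),
        # Liveness: restart only after sustained failure, to avoid restart loops
        # during slow start-up or short pauses.
        "livenessProbe": http_probe(PING_PATH, port, initial_delay=300, period=30, failure_threshold=5),
    }


if __name__ == "__main__":
    # Merge these keys into the Pega container spec of each tier's Deployment.
    print(json.dumps(pega_probes(), indent=2))
```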

Operational best practices:

- Perform a detailed analysis before starting the migration to containers.

- Plan for a balanced team to migrate your Pega solution to cloud and container services. Sample roles:

  - System administrator

  - Application developer

  - Network engineer

  - Database administrator

  - Project manager/program director

  - Product owner/business owner

  - Application architect

  - Performance engineer

- Plan and carry out training of your resources on the container platform.

- Upgrade Pega 5.x and 6.x applications to version 7 or 8 before migrating them to containers.

- Avoid combining other major activities (Pega upgrade, major functional change, new integration, etc.) with the container migration.

- Plan for and practice a DevOps culture; migrating to containers alone will not bring the full benefits.

Next steps

Containerizing your applications is only the first step toward taking advantage of the opportunities that cloud computing affords. In my next article, we’ll discuss microservices, which let you decompose a monolithic application into smaller, more manageable, independently developed pieces.

Note: Opinions and approaches expressed in this article are solely my own and do not express the views or opinions of my employer, Pegasystems or any other organization.

Some of the product names, logos, brands, and diagrams are the property of their respective owners.

Please post your comments to say where you agree or disagree and to offer suggestions.